Building an AI-powered REST API with Gradio and Hugging Face Spaces – for free!

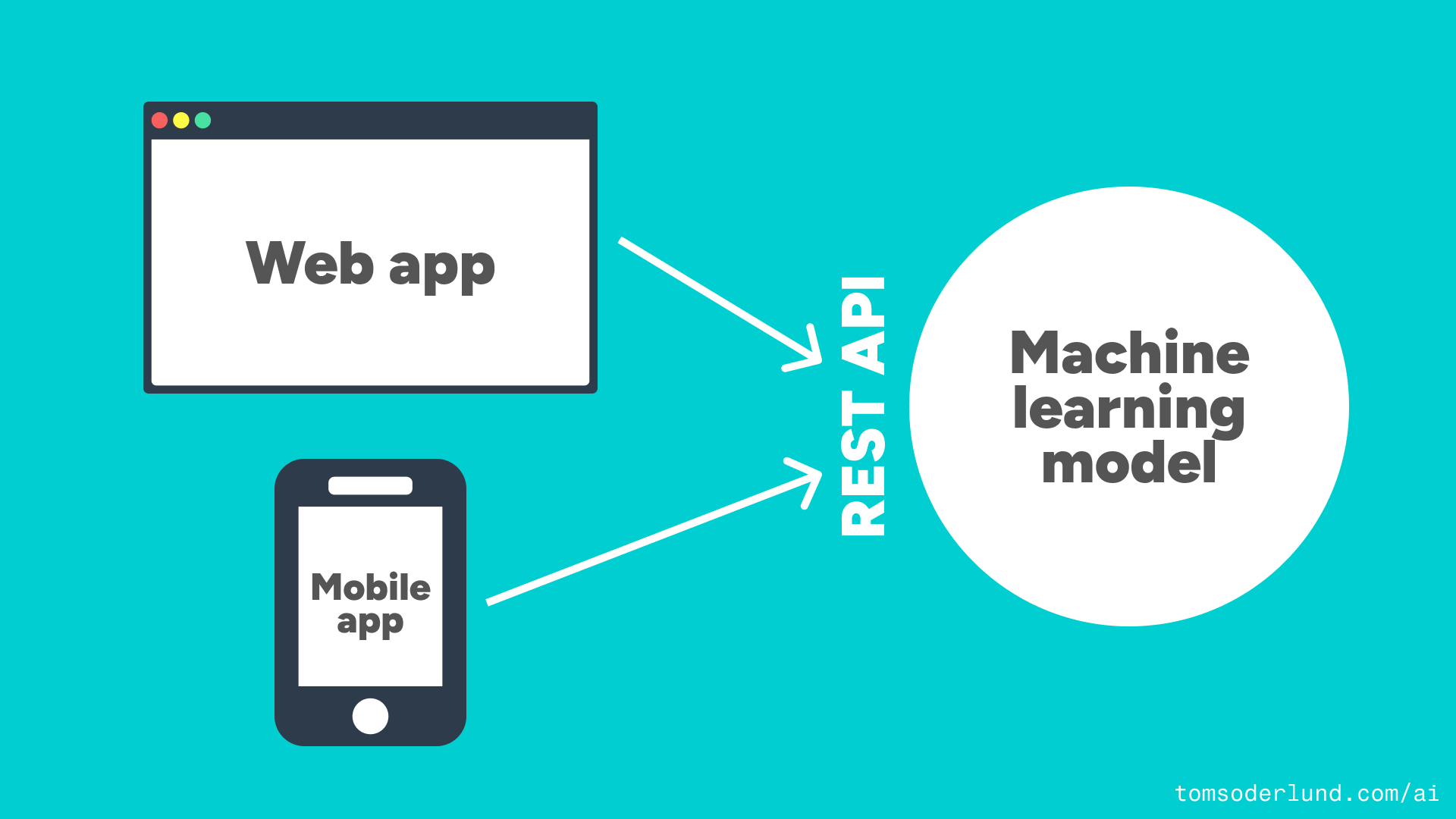

I want to use machine learning models in my web and mobile apps, but to do so I must host my ML app somewhere.

Running a pre-trained ML model to make predictions is called inference. I just want to drop in some Python ML code and quickly get a REST API for it, but finding a simple way to do this has proven more difficult than I expected.


There are plenty of hosting providers, including big ones like Amazon AWS and Google GCP, but using them is complex and often requires building your own REST API with Flask.

Luckily, @prundin and @abidlabs had a solution: Gradio and Hugging Face “Spaces”.

New Space on Hugging Face

Hugging Face is like GitHub, but for ML models and apps. A “Space” on Hugging Face is an ML app that you can update via Git. Spaces are priced by the hardware they run on, and the simplest CPU option is free!

Gradio is a Python library of user interface components (e.g. input fields) for ML apps.

Create a new Space:

- Go to https://huggingface.co/spaces and click Create new Space.

- Select the OpenRAIL license if you’re unsure.

- Select Gradio as the Space SDK.
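You can also script this step instead of using the web page. The `huggingface_hub` Python package has a `create_repo` function that can create a Space with the Gradio SDK. This package is a separate install and is not used elsewhere in this article.

The sketch below makes these assumptions:

- `huggingface_hub` is installed and you are logged in (for example with `huggingface-cli login`).
- `space_git_url` and `create_gradio_space` are hypothetical helper names.
- It does not set a license, so you would still choose that yourself.

```python
def space_git_url(user_name: str, space_name: str) -> str:
    """Return the Git URL of a Space (same pattern as in the clone step below)."""
    return f"https://huggingface.co/spaces/{user_name}/{space_name}"


def create_gradio_space(user_name: str, space_name: str) -> str:
    """Create a Space that uses the Gradio SDK and return its Git URL.

    Assumes huggingface_hub is installed and you are logged in.
    """
    # Imported lazily so space_git_url works without huggingface_hub installed
    from huggingface_hub import create_repo

    create_repo(
        repo_id=f"{user_name}/{space_name}",
        repo_type="space",
        space_sdk="gradio",
        exist_ok=True,  # don't fail if the Space already exists
    )
    return space_git_url(user_name, space_name)


# Example (placeholders, requires a Hugging Face login):
# print(create_gradio_space("USER_NAME", "SPACE_NAME"))
```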

Local development setup

Go to your development folder and clone your Space:

```shell
git clone https://huggingface.co/spaces/USER_NAME/SPACE_NAME
cd SPACE_NAME
```

Set up a Python environment and install Gradio:

```shell
# Create and activate a "safe" virtual Python environment (exit it with the command "deactivate")
python3 -m venv env
source env/bin/activate

# Add env/ to .gitignore so the packages in the env folder are not committed
echo "env/" >> .gitignore

# Install Gradio
pip3 install gradio

# Update the list of required packages (do this every time you add packages)
pip3 freeze > requirements.txt
```

Settings in the README file

The README.md file contains some crucial settings for your app.

I struggled with errors in my live environment on Hugging Face until I realized I had to pin the Python version to the same one I use locally. Find your local version by running:

```shell
python3 --version
```

Then add that version number to the settings block at the top of README.md, like this:

```yaml
python_version: 3.9.13
```
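To catch a mismatch before pushing, you could run a small check like the one below. It is a sketch with these assumptions:

- The `python_version` key sits in the YAML block between the first two `---` lines of README.md.
- It uses a simple line-based parser rather than a full YAML library.
- `readme_python_version`, `versions_match` and `check_readme` are hypothetical helper names.

```python
import platform
import re
from pathlib import Path
from typing import Optional


def readme_python_version(readme_text: str) -> Optional[str]:
    """Return the python_version value from the README's front matter, or None."""
    # The front matter is the block between the first two '---' lines
    match = re.match(r"^---\s*\n(.*?)\n---", readme_text, re.DOTALL)
    if not match:
        return None
    for line in match.group(1).splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "python_version":
            return value.strip().strip("\"'")  # drop optional quotes
    return None


def versions_match(pinned: str, local: str) -> bool:
    """True if the pinned version (e.g. "3.9" or "3.9.13") matches the local one."""
    pinned_parts = pinned.split(".")
    return local.split(".")[: len(pinned_parts)] == pinned_parts


def check_readme(path: str = "README.md") -> None:
    text = Path(path).read_text(encoding="utf-8")
    pinned = readme_python_version(text)
    local = platform.python_version()
    if pinned is None:
        print(f"No python_version in {path}; local Python is {local}")
    elif versions_match(pinned, local):
        print(f"OK: README pins {pinned}, local Python is {local}")
    else:
        print(f"Mismatch: README pins {pinned}, local Python is {local}")


# Run from your Space folder:
# check_readme()
```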

Creating a simple user interface and REST API

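Create a blank app.py file:

```shell
touch app.py
```

Open app.py and add:

```python
import gradio


def my_inference_function(name):
    # Gradio passes the content of the text box in as `name`
    return "Hello " + name + "!"


# Connect the function to a text input and a text output;
# Gradio also exposes this function through a REST API
gradio_interface = gradio.Interface(
    fn=my_inference_function,
    inputs="text",
    outputs="text",
)

# Start the web server (on Hugging Face, the Space runs app.py for you)
gradio_interface.launch()
```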
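Gradio passes each input component to your function as one positional argument, in order. The JSON `data` list sent to the REST API follows the same order.

As a hypothetical variation (not part of this article's Space), a function with a text input and a number input might look like the sketch below:

- `greet_repeatedly` and `build_interface` are illustrative names.
- Gradio is imported inside `build_interface` only so that the plain function can be tested without Gradio installed.

```python
def greet_repeatedly(name: str, times: float) -> str:
    # Gradio's "number" input arrives as a float, so convert before repeating
    return " ".join(["Hello " + name + "!"] * int(times))


def build_interface():
    import gradio

    return gradio.Interface(
        fn=greet_repeatedly,
        inputs=["text", "number"],  # maps to (name, times), in this order
        outputs="text",
    )


# In app.py you would then call: build_interface().launch()
# A matching REST payload would be: { "data": ["Jill", 2] }
```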

Upload the files to Hugging Face using Git:

```shell
git add .
git commit -m "Creating app.py"
git push
```

Testing your app on Hugging Face

Believe it or not, you now have a functional app with a REST API!

Open https://huggingface.co/spaces/USER_NAME/SPACE_NAME in your browser.

See an example here: https://huggingface.co/spaces/tomsoderlund/rest-api-with-gradio

User interface: enter a name in the name box and press Submit.

REST API interface: at the bottom of the page there is a link called “Use via API”. Click it for full instructions. In short, you can now call your app over REST:

```shell
curl -X POST -H 'Content-type: application/json' --data '{ "data": ["Jill"] }' https://USER_NAME-SPACE_NAME.hf.space/run/predict
```

The REST API returns something like this:

```json
{
  "data": [
    "Hello Jill!"
  ],
  "is_generating": false,
  "duration": 0.00015354156494140625,
  "average_duration": 0.00015354156494140625
}
```
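The same call can be made from Python using only the standard library. This sketch mirrors the curl command above:

- `build_predict_request`, `parse_predict_response` and `call_predict` are hypothetical helper names.
- The `/run/predict` path is taken from this article's setup. Check your Space's “Use via API” page in case your Gradio version exposes a different path.

```python
import json
import urllib.request


def build_predict_request(base_url: str, name: str) -> urllib.request.Request:
    """Build the same POST request as the curl example."""
    payload = json.dumps({"data": [name]}).encode("utf-8")
    return urllib.request.Request(
        base_url.rstrip("/") + "/run/predict",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_predict_response(body: dict) -> str:
    """Return the first output (e.g. "Hello Jill!") from the JSON response."""
    return body["data"][0]


def call_predict(base_url: str, name: str, timeout: float = 30.0) -> str:
    request = build_predict_request(base_url, name)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return parse_predict_response(json.load(response))


# Example (requires a running Space):
# print(call_predict("https://USER_NAME-SPACE_NAME.hf.space", "Jill"))
```

Pointing `call_predict` at the local address from the next section should work the same way, assuming the local app exposes the same path.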

Testing your app locally

Locally, you can run:

```shell
python3 app.py
```

You can now test your app interactively at http://127.0.0.1:7860/ and access the REST API at http://127.0.0.1:7860/run/predict

(You need to stop the app with Ctrl+C and restart it whenever you modify app.py.)

Going further

You can now explore all the models on Hugging Face, including Stable Diffusion 2 and GPT-Neo, and add them to your Spaces app.

See a complete ML example at https://huggingface.co/spaces/tomsoderlund/swedish-entity-recognition and notice how few lines of code are actually needed to invoke the ML model.
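Here is a rough sketch of what adding a model can look like. It wraps a Hugging Face `transformers` sentiment-analysis pipeline in the same kind of Gradio interface. This is not the code from the Space linked above, and it makes these assumptions:

- You add `transformers` (plus a backend such as `torch`) to requirements.txt.
- `pipeline` downloads its default model for the task on first use.
- `get_classifier`, `format_predictions`, `classify` and `build_interface` are hypothetical names.

```python
from functools import lru_cache
from typing import Dict, List


@lru_cache(maxsize=1)
def get_classifier():
    # Imported lazily; creating the pipeline downloads a model the first time
    from transformers import pipeline

    return pipeline("sentiment-analysis")


def format_predictions(predictions: List[Dict]) -> str:
    # The pipeline returns a list like [{"label": "POSITIVE", "score": 0.99}]
    return ", ".join(f"{p['label']} ({p['score']:.2f})" for p in predictions)


def classify(text: str) -> str:
    return format_predictions(get_classifier()(text))


def build_interface():
    import gradio

    return gradio.Interface(fn=classify, inputs="text", outputs="text")


# In app.py: build_interface().launch()
```

Caching the pipeline with `lru_cache` means the model loads once per process rather than on every request.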